Foundation models (FMs) are large deep learning neural networks trained on large amounts of data. They are also known as “general-purpose AI” (GPAI) because they are designed to be general-purpose and to serve as a starting point for further AI development.

Foundation vs Traditional Models

Common forms of foundation models include large language models (LLMs) and other generative AI models. FMs differ from traditional ML models, or “narrow AI” models, in that they are pretrained on broad, diverse data rather than built for a single task. This allows one model to be adapted to a variety of tasks, unlike the specialized models of the past.

How Foundation Models Work

Foundation models are trained on large datasets using self-supervised learning techniques, which let them learn patterns and relationships in the data without explicit labels. They generate outputs based on the patterns they learned during training. Structurally, foundation models are built on complex neural network architectures that consist of multiple layers of interconnected nodes. These nodes process the input data and generate the desired output. Common architectures include transformers, Generative Adversarial Networks (GANs), and variational autoencoders (VAEs).
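To make the idea of self-supervised learning concrete, the following is a minimal sketch in Python. It is not how any real foundation model is implemented: it swaps the neural network for a simple bigram frequency table, and the function names (`make_training_pairs`, `train_bigram_model`, `predict_next`) and the toy corpus are assumptions made for illustration. What it does show is the key point from the paragraph above: the training targets are derived from the raw text itself (each token's "label" is simply the next token), so no human-written labels are needed.

```python
from collections import Counter, defaultdict


def make_training_pairs(tokens):
    """Derive (context, target) pairs from raw text.

    The target for each token is simply the token that follows it,
    so the supervision signal comes from the data itself.
    """
    return [(tokens[i], tokens[i + 1]) for i in range(len(tokens) - 1)]


def train_bigram_model(pairs):
    """Count how often each target token follows each context token."""
    counts = defaultdict(Counter)
    for context, target in pairs:
        counts[context][target] += 1
    return counts


def predict_next(model, context):
    """Return the most frequent continuation seen in training, or None if unseen."""
    if context not in model:
        return None
    return model[context].most_common(1)[0][0]


# Hypothetical toy corpus; real foundation models train on vastly larger datasets.
corpus = "the model reads text and the model predicts text"
tokens = corpus.split()

pairs = make_training_pairs(tokens)
model = train_bigram_model(pairs)

print(pairs[:3])
print(predict_next(model, "the"))
```

A real foundation model replaces the frequency table with a deep neural network (for example, a transformer) and learns from far longer contexts, but the way training examples are constructed from unlabeled data follows the same principle.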

Use Cases

The following are common use cases for foundation models in AI application development:

- Natural Language Processing (NLP)

- Computer Vision

- Speech Recognition

- Code Generation

- Image Generation

- Text Generation

- Human Engagement

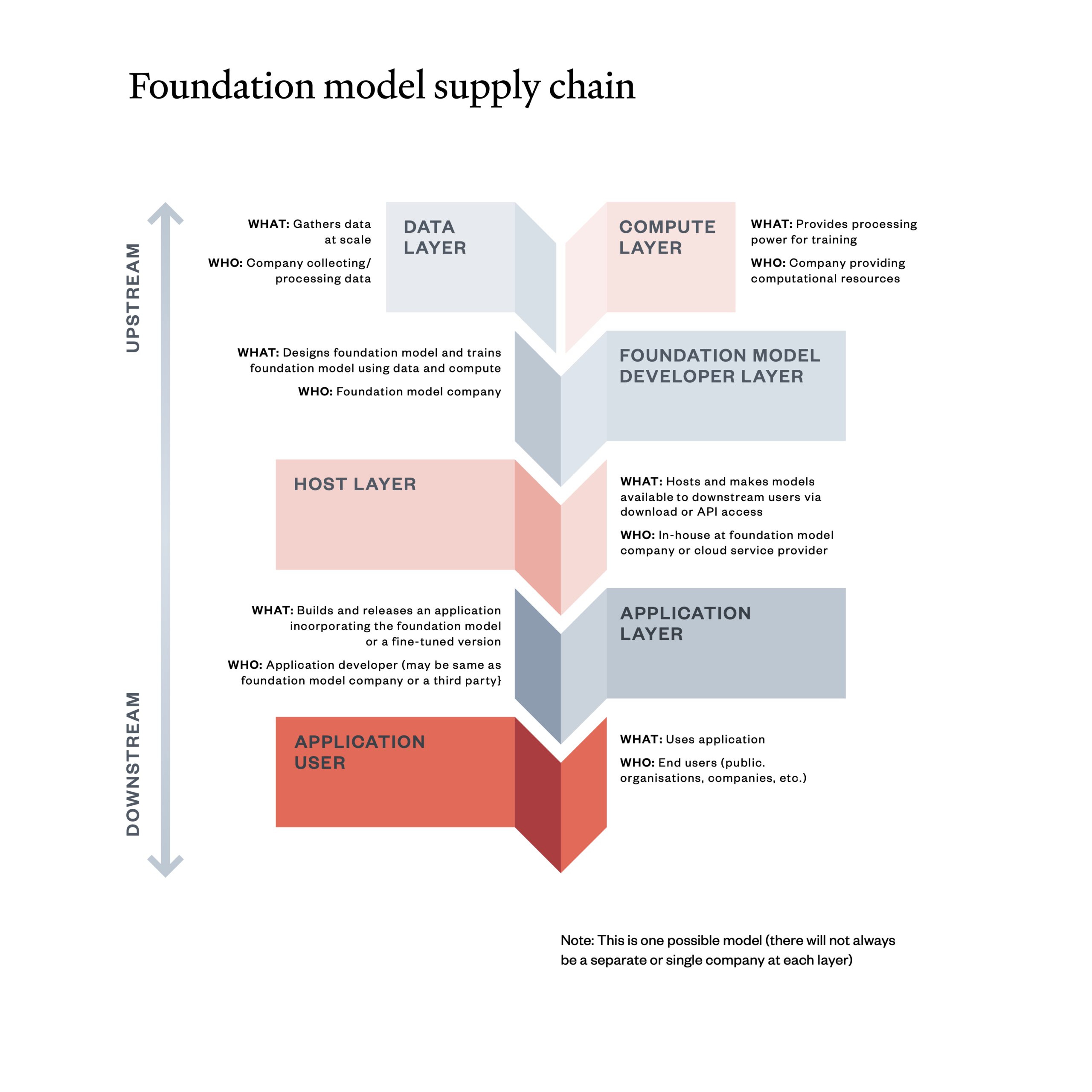

Supply Chain

The following is an example of a supply chain for foundation models:

(Source: Ada Lovelace Institute)

Examples

- BERT

- GPT

- GPT-2

- GPT-3

- GPT-4

- DALL-E 2

- PaLM 2

- Amazon Titan

- Amazon Rekognition

- BLIP-2

- AI21 Jurassic

- Midjourney

- Spark

- Claude

- Claude 3.5 Sonnet

- Claude 3 Opus

- Claude 3 Haiku

- Claude Instant

- Stable Diffusion

- BLOOM